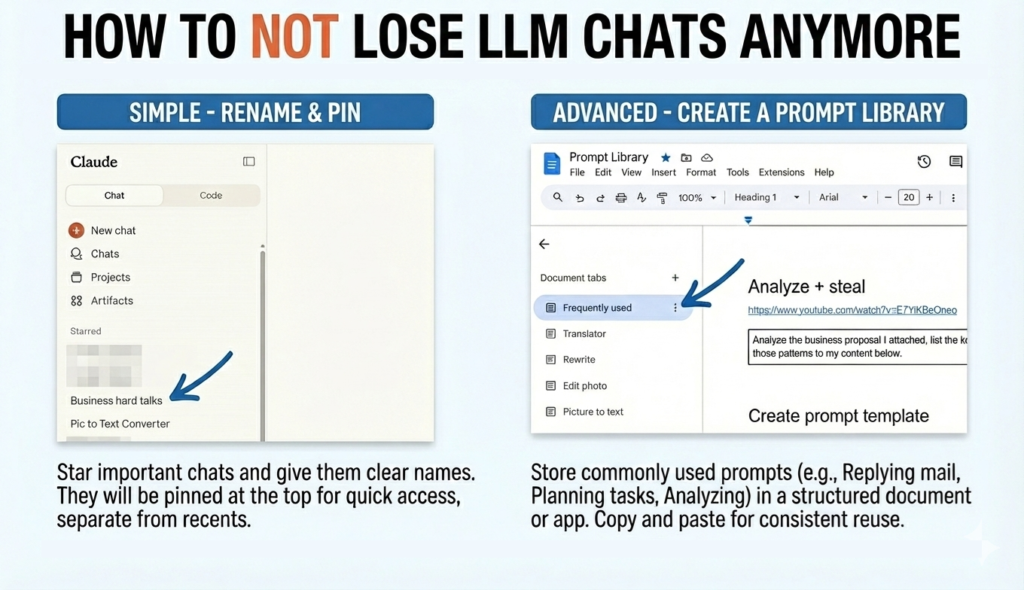

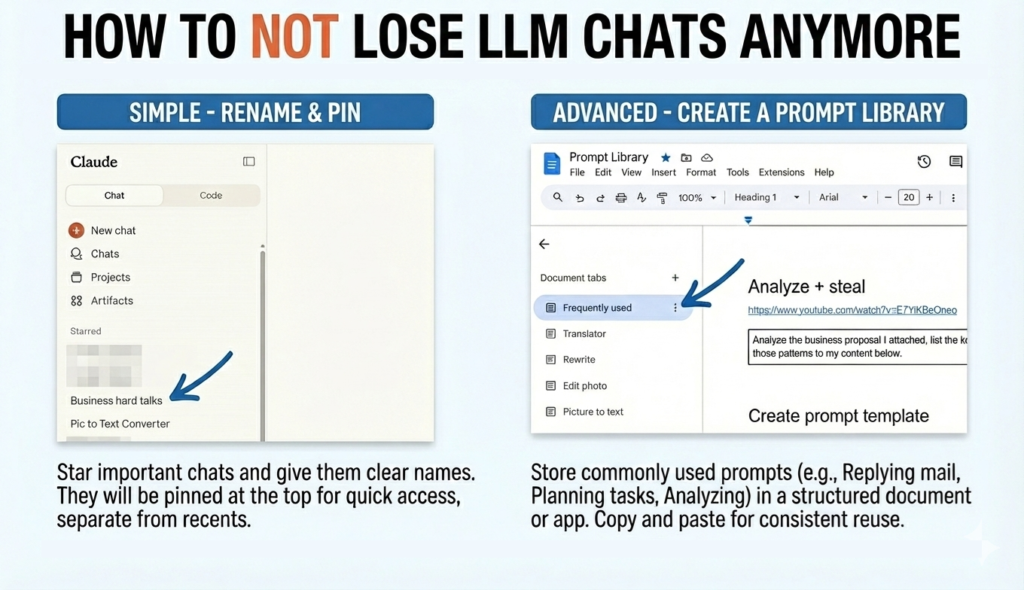

2 ways to spend less time searching chats in LLMs.

- Long chats – rename or pin them for quick access.

- Occasionally used prompts – keep a prompt library.

We often over-categorize things, usually at the start of a project, and that is unsustainable, for two reasons:

1. Duplicated files: a file, or type of file, falls into two or more categories.

2. Unsearchable: everyone follows a different categorization logic. I sort apples by color (red and green); someone else sorts them by origin (Japan and the United States). Both are correct.

Product files and campaign files are related, but shouldn’t be duplicated. Design files and product files are related, but shouldn’t be duplicated.

Dynamic filtering builds the correlation between two different document types without duplicating the files themselves.
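As a rough illustration of that idea, here is a minimal Python sketch. It assumes each file is stored once and tagged with metadata; the `Document` fields, the tag values, and the sample file names are all hypothetical. A "view" is just a filter computed on demand, so a product file and a campaign file can be related through a shared tag without copying either one into the other's folder.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Document:
    name: str
    doc_type: str  # hypothetical field, e.g. "product", "campaign", "design"
    tags: frozenset = field(default_factory=frozenset)  # hypothetical metadata tags


# Each file exists exactly once; relationships live in the metadata, not in folders.
LIBRARY = [
    Document("X100 datasheet.pdf", "product", frozenset({"X100"})),
    Document("Spring launch deck.pptx", "campaign", frozenset({"X100", "spring"})),
    Document("X100 render.psd", "design", frozenset({"X100"})),
    Document("Y200 datasheet.pdf", "product", frozenset({"Y200"})),
]


def dynamic_filter(docs, doc_type=None, tag=None):
    """Return documents matching the given type and/or tag, computed on demand."""
    return [
        d for d in docs
        if (doc_type is None or d.doc_type == doc_type)
        and (tag is None or tag in d.tags)
    ]


def related(docs, doc, other_type):
    """Find documents of another type that share at least one tag with `doc`."""
    return [d for d in docs if d.doc_type == other_type and d.tags & doc.tags]


if __name__ == "__main__":
    datasheet = LIBRARY[0]
    for d in related(LIBRARY, datasheet, "campaign"):
        print(d.name)
```

The design choice here is that categories become queries rather than places: adding a new way to slice the library means writing a new filter, not reorganizing or copying files.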

Spending time hunting for an AI chat from two weeks ago is the worst experience.

I found two ways to make that more manageable.

1. For long, deep-dive threads, rename the chat, and even pin it for quick access.

2. For repeated prompts, keep a prompt library.

If it’s true that people are transitioning from pipeline contributors to pipeline orchestrators, how does that fundamentally change the recruitment process?

I think more time could be spent understanding the candidate’s AI pipeline.

Interestingly, expertise and skill set are still fundamental. They help determine how small a task can be broken down, and which tools could test the hypothesis.

AI makes content faster and better: better logic, better stories, long form and short form, in substantially less time. It even knows what I’m trying to say before I can verbalize it clearly.

Is there still value in doing things manually?

Experience might be the value of being human. During the process, the hows deepen in our memory and become our very own context.

When it loops back, it gives us better judgement.

This image surprised me: I only had a vague visual idea when I described the statement, yet it produced an output with more depth, insight, and clarity. It knew what I was trying to say before I said it clearly.

The image would have taken multiple people hours to complete, but it was done in minutes.

| Before | After |
| --- | --- |
| 1. Explaining the concept to the designer | 1. Voice input using Wispr Flow, for precise context input |
| 2. Designer likely spends 2 to 3 days | 2. Restructure using Claude |
| 3. Back-and-forth modifications | 3. Generate using Google Gemini 3 |

What’s the point of making slides anymore?

Completing a project requires multiple SMEs, individuals or groups, each handling a piece of it. But AI is the best SME, and it made me rethink how I should recruit.

I think the future of work is about minimizing cost and efficiently linking task-specific LMs. The first competitive factor is depth: reaching an outcome through an AI workflow that incorporates multiple AI tools. The second is breadth: scaling the number of workflows to reach more quality outcomes.

Making a presentation involves the person with the core idea, the project manager who coordinates the different designers, and designers for specific domains. In the age of AI, it takes fewer people orchestrating an AI workflow to reach the same outcome, and often a better one.

Staying competitive might mean strengthening a handful of AI flows (for slides, videos, brochures, etc.).

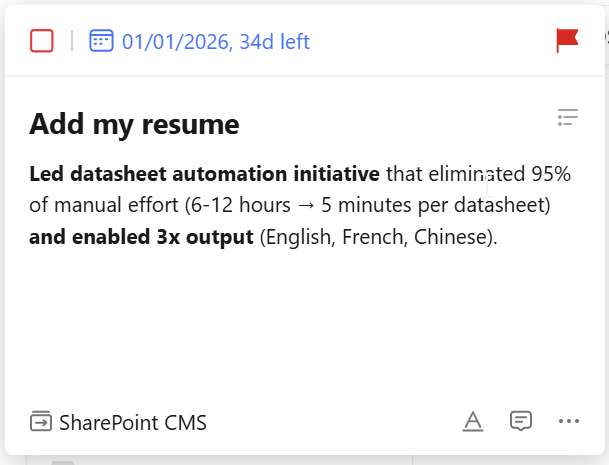

It’s difficult to know the value of one’s own work, especially when working as part of a bigger project.

With LLMs, I found a way to get better visibility of the end goal:

Act as an expert consultant in the field of _____. Based on your memory of my project _____, what is the market value? How much would alternative methods cost?

What I found is that I undervalue the work I do. Maybe this is a blind spot for people who work for a paycheck.
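A prompt like this is a good candidate for a prompt library, since only the blanks change between uses. Below is a minimal Python sketch, assuming the library is just a dictionary of `string.Template` objects; the key `market_value` and the placeholder names `$field` and `$project` are hypothetical choices, not a standard format.

```python
from string import Template

# A minimal prompt library: reusable templates keyed by a short, hypothetical name.
PROMPT_LIBRARY = {
    "market_value": Template(
        "Act as an expert consultant in the field of $field. "
        "Based on your memory of my project $project, what is the market value? "
        "How much would alternative methods cost?"
    ),
}


def build_prompt(name: str, **values: str) -> str:
    """Fill a stored template; Template.substitute raises KeyError if a placeholder is missing."""
    return PROMPT_LIBRARY[name].substitute(**values)


if __name__ == "__main__":
    print(build_prompt("market_value", field="document management", project="SharePoint CMS"))
```

Using `substitute` rather than `safe_substitute` is deliberate in this sketch: a forgotten blank fails loudly instead of sending a half-filled prompt.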

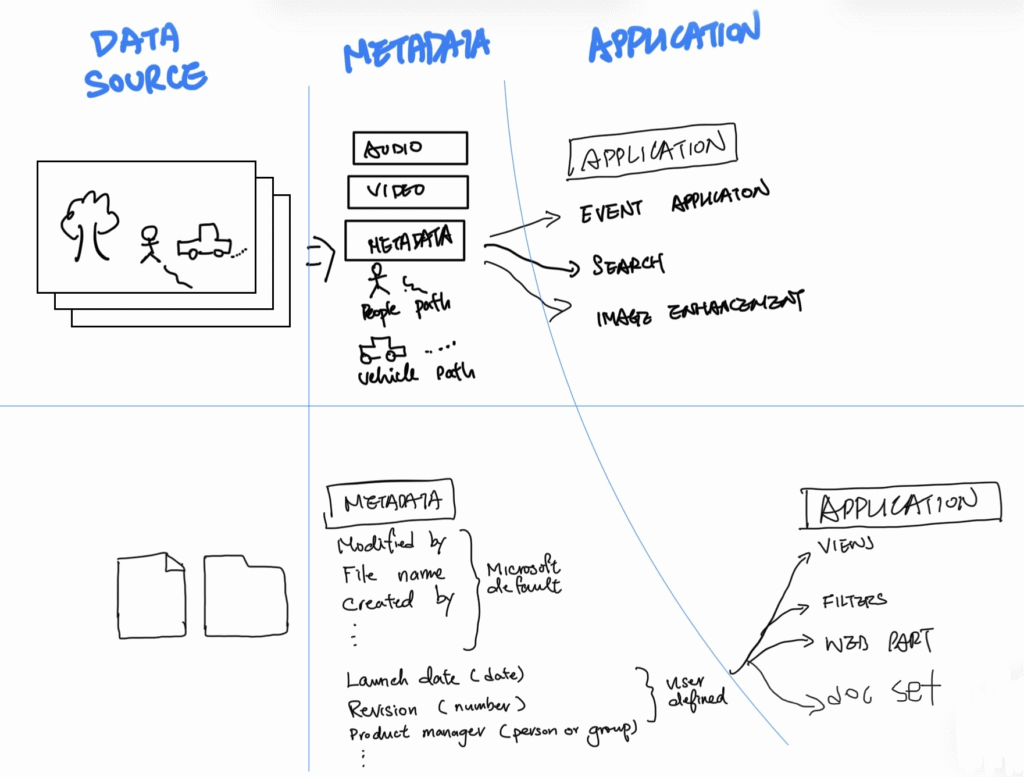

My first encounter with metadata was in 2021, during the pandemic and the rise of CNNs running on SoCs, which performed much more efficiently than traditional machine learning. In short, metadata in video surveillance focused on people and vehicles (often called ‘objects’): detection or non-detection, path, appearance color, facial attributes, etc.

All in all, it was about providing better answers to:

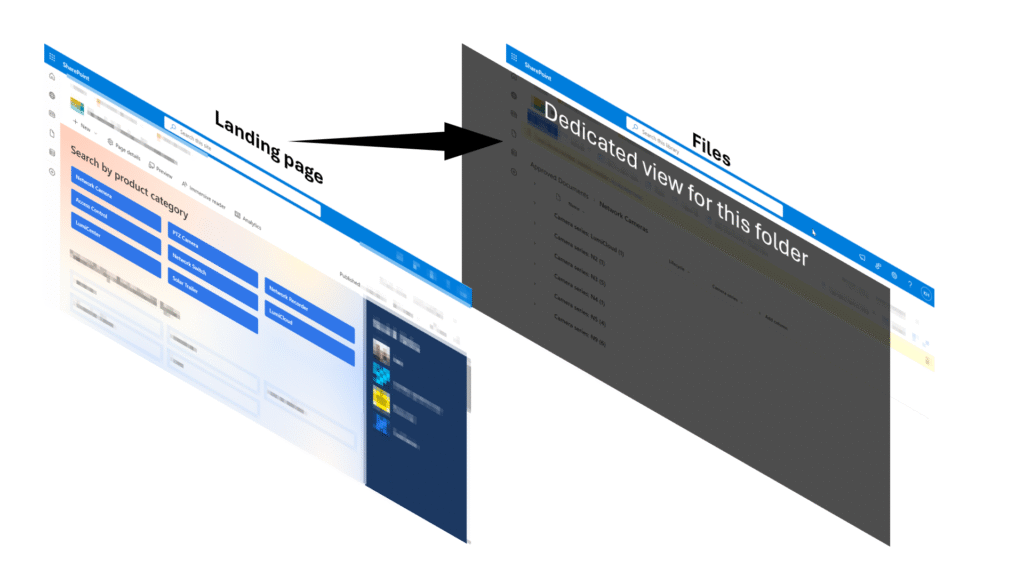

In a SharePoint CMS project, I had the chance to look at metadata from a different angle: documents.

At its core, I think it’s about locating files faster through data and visual presentation.

Database structure doesn’t have to be the UX.
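One way to picture this: the storage path and the presentation can be independent. The following is a minimal Python sketch, not SharePoint’s API; it assumes each record carries both a storage path and metadata fields, and the field names (`site`, `type`) and sample records are hypothetical. The same stored records are presented grouped by a metadata field instead of by folder.

```python
from collections import defaultdict

# Hypothetical records: where each file lives (storage path) plus its metadata.
RECORDS = [
    {"path": "Sites/Cameras/cam-01.pdf", "site": "Warehouse", "type": "network camera"},
    {"path": "Sites/Cameras/cam-02.pdf", "site": "Office", "type": "network camera"},
    {"path": "Sites/Cameras/cam-03.pdf", "site": "Warehouse", "type": "network camera"},
    {"path": "Sites/Recorders/nvr-01.pdf", "site": "Office", "type": "recorder"},
]


def group_view(records, key):
    """Present file names grouped by a metadata field, independent of storage path."""
    groups = defaultdict(list)
    for r in records:
        file_name = r["path"].rsplit("/", 1)[-1]  # show only the file name, not the folder path
        groups[r[key]].append(file_name)
    return dict(groups)


if __name__ == "__main__":
    for site, files in group_view(RECORDS, "site").items():
        print(site, files)
```

Swapping `"site"` for `"type"` gives a different view of the same data without moving anything in storage.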

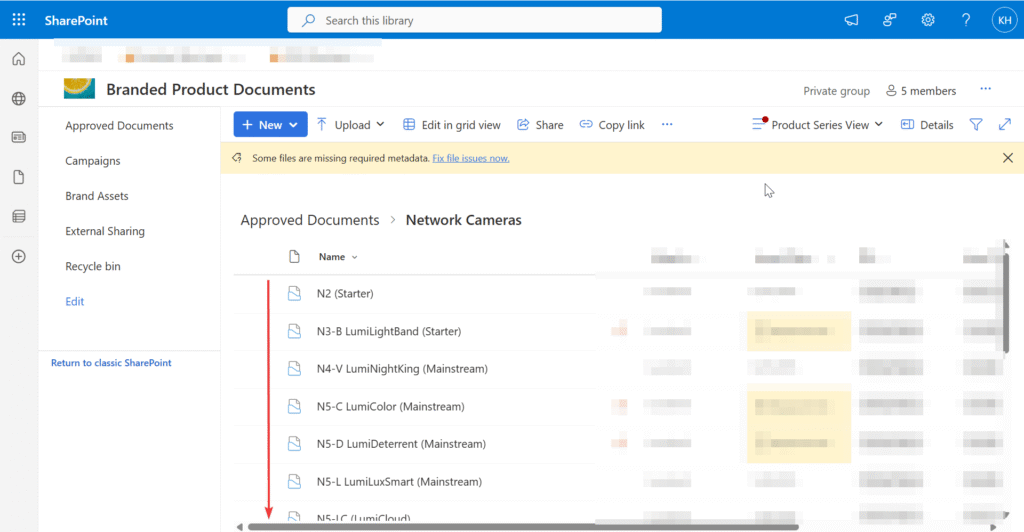

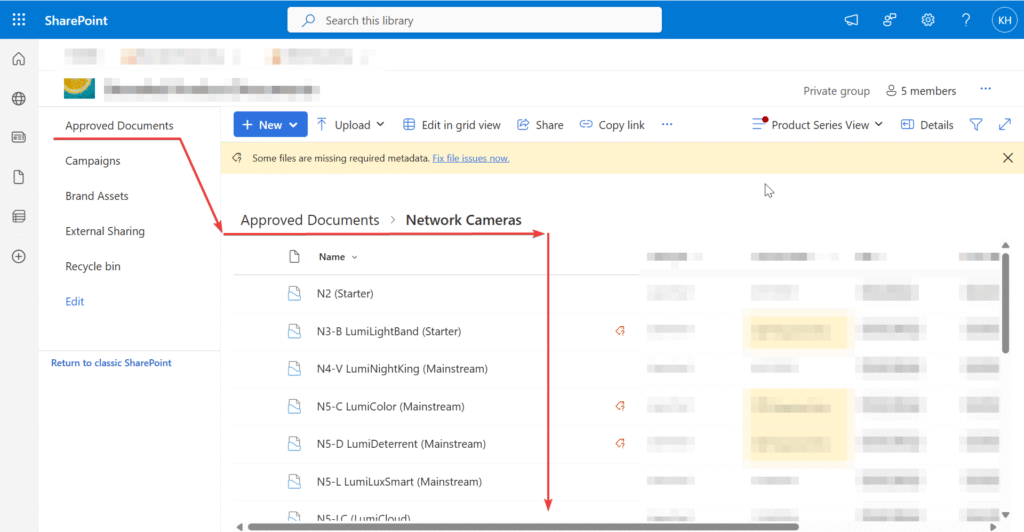

During the pilot, Jennifer called because the flat list view of network cameras was too long to navigate. It was a valid point.

The problem was that it applied to only ONE layer-2 folder. It’s an outlier, so changing the default view would affect all the other folders.

There seems to be a per-location view setting in classic SharePoint, mentioned here and here, but it doesn’t seem to work in modern SharePoint.

[X] change default view

[X] per-location view

My problem was thinking about user navigation in terms of the database path. I was thinking in folder structure, which is linear.

The workaround is using a page and a web part, mentioned in this Reddit thread. It reminds me: